In this blog post, I discuss Amazon Elastic File System (EFS) in conjunction with AWS Backup. When you use both, it is easy to inadvertently create duplicate backups of the same file system, leading to double AWS Backup costs. I will explain why this happens and suggest a way to monitor for it using an AWS Config Custom Rule.

What Are EFS and AWS Backup?

Amazon Elastic File System (EFS) is a managed file system service that provides scalable, elastic storage for use with AWS services and on-premises resources. It allows multiple instances to access data concurrently, making it ideal for use cases like big data and analytics, media processing workflows, and content management.

AWS Backup is a fully managed service that centralizes and automates data protection across AWS services. It simplifies the process of backing up and restoring data, ensuring business continuity and compliance with regulatory requirements.

The "Trap" of Double Backup Costs

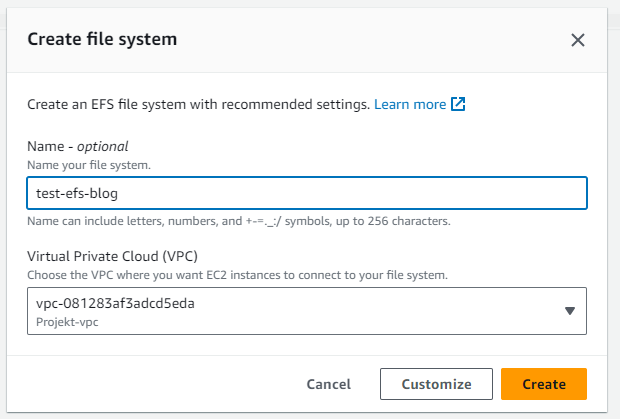

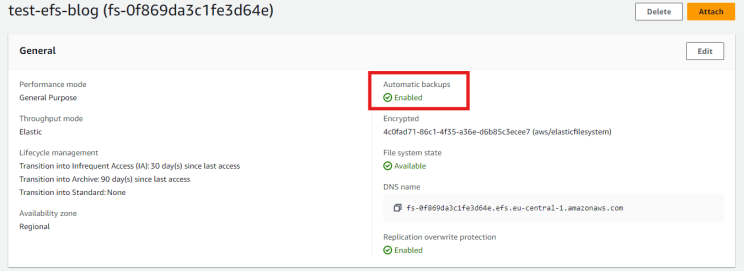

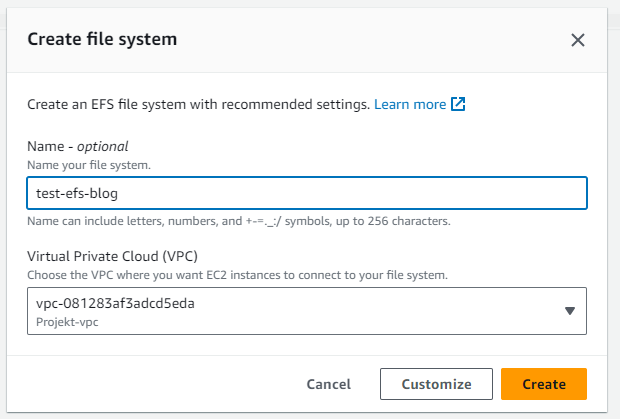

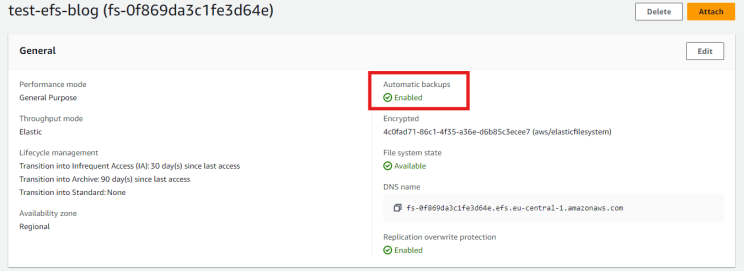

When a new Amazon EFS file system is created, the "Automatic backups" feature of EFS is enabled by default. This results in the creation of an AWS Backup Plan named "aws/efs/automatic-backup-plan" and a Backup Vault named "aws/efs/automatic-backup-vault", where the backups are automatically stored with a total retention period of 5 weeks.

The EFS file system creation wizard

Automatic Backups are enabled by default

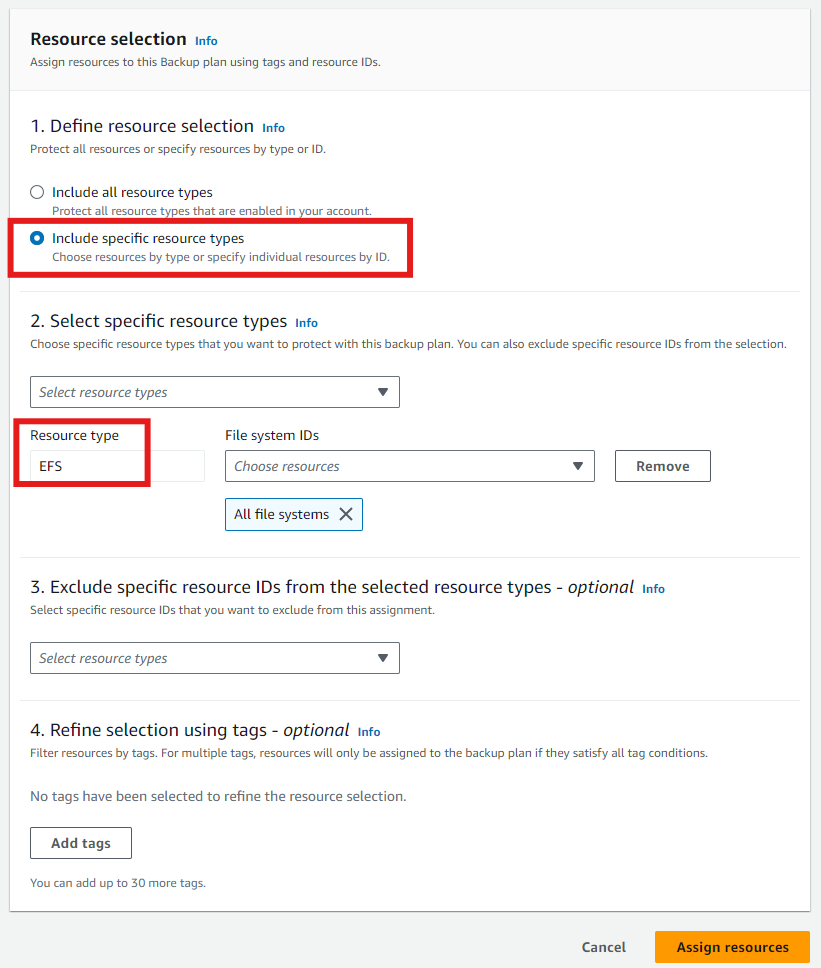

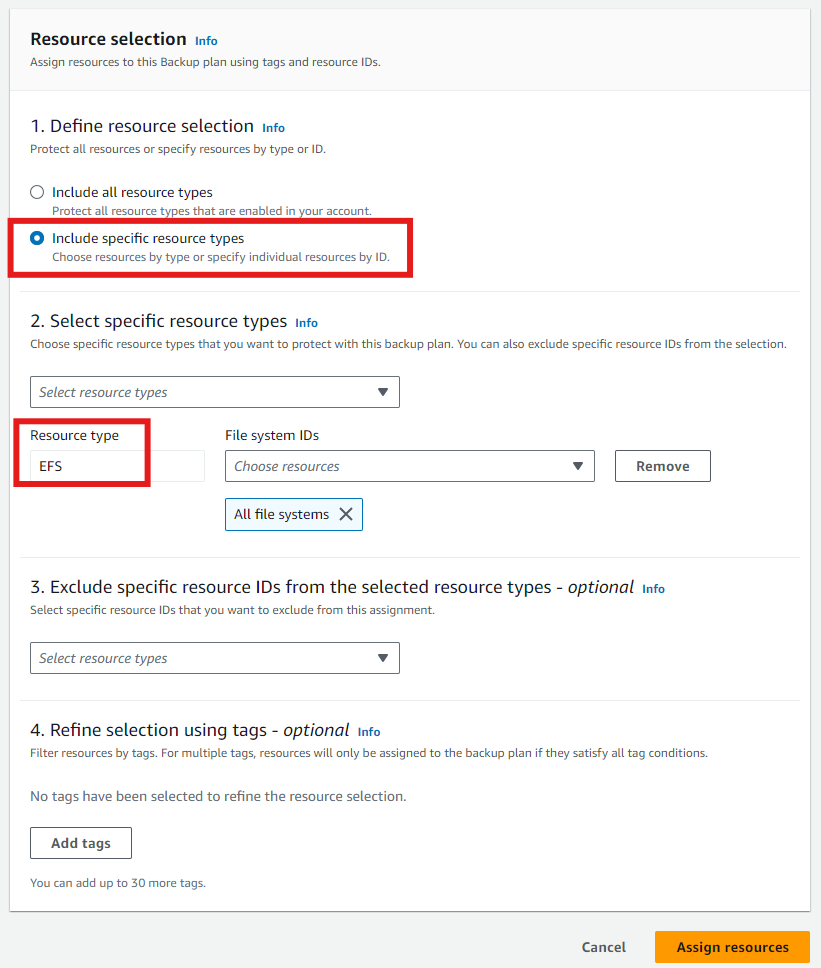

However, if AWS Backup Plans are also rolled out across all accounts in the AWS Organization, or if the account user sets up additional AWS Backup Plans that include the EFS file system either by resource type or via tags, then the same file system is backed up into more than one Backup Vault. This redundancy results in double AWS Backup costs.
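To make the two selection styles concrete, here is a minimal sketch of the `BackupSelection` structure that boto3's `create_backup_selection` accepts. The helper names, the selection names, and the `backup=true` tag are hypothetical placeholders I made up for illustration; the ARN wildcard is my assumption of how "all EFS file systems" is usually expressed.

```python
def efs_selection_by_resource_type(iam_role_arn):
    """Build a selection that matches every EFS file system via an ARN wildcard."""
    return {
        "SelectionName": "all-efs-file-systems",  # hypothetical name
        "IamRoleArn": iam_role_arn,
        # Assumed wildcard pattern for "all EFS file systems"
        "Resources": ["arn:aws:elasticfilesystem:*:*:file-system/*"],
    }


def selection_by_tag(iam_role_arn, tag_key="backup", tag_value="true"):
    """Build a selection that matches any supported resource carrying the tag."""
    return {
        "SelectionName": f"tagged-{tag_key}-{tag_value}",  # hypothetical name
        "IamRoleArn": iam_role_arn,
        "ListOfTags": [
            {
                "ConditionType": "STRINGEQUALS",
                "ConditionKey": tag_key,
                "ConditionValue": tag_value,
            }
        ],
    }


# Usage (needs boto3, credentials, and an existing backup plan; not run here):
#   import boto3
#   boto3.client("backup").create_backup_selection(
#       BackupPlanId=plan_id,
#       BackupSelection=efs_selection_by_resource_type(role_arn),
#   )
```

Either selection picks up an EFS file system regardless of whether its automatic backups are already enabled, which is exactly how the overlap arises.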

Creating an AWS Backup Plan

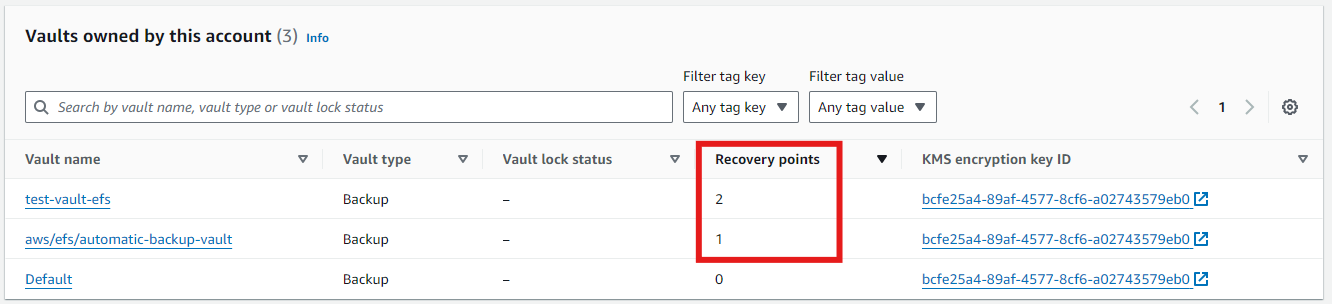

In this example, I created two EFS file systems: one with "Automatic Backups" enabled and one without. Additionally, all EFS file systems are backed up using a custom AWS Backup Plan. As a result, overnight, two backups were stored in the backup vault "test-vault-efs" and one in the "Automatic Backup" vault "aws/efs/automatic-backup-vault".

How Can We Monitor for Duplicate Backups for EFS File Systems?

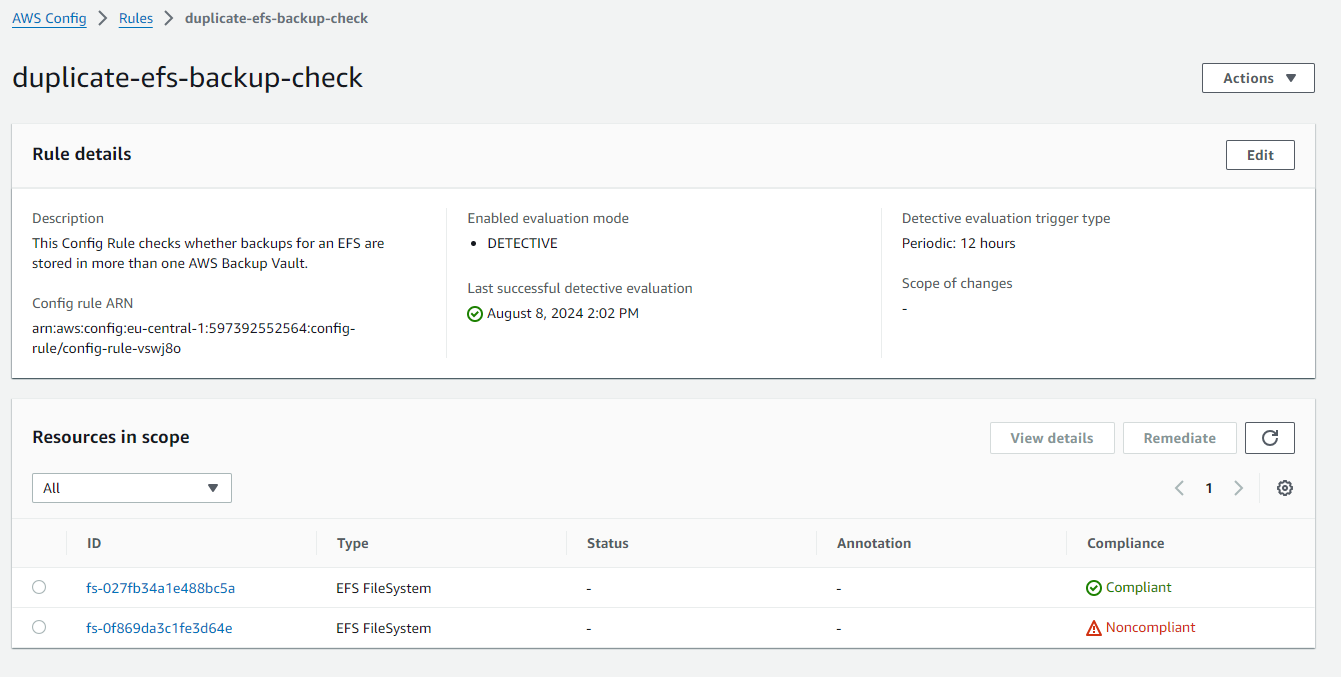

To monitor whether duplicate backups are being created for EFS file systems, I have created an AWS Config Custom Rule. This rule checks every 12 hours to see if any EFS file systems have recovery points in more than one backup vault. If so, the EFS is marked as noncompliant.
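The core of the check can be sketched independently of AWS. The following is a simplified illustration of the idea, not the Lambda code itself: it assumes you already have recovery point records with the `ResourceArn` and `BackupVaultName` fields that AWS Backup returns, and the function name and sample ARNs are hypothetical.

```python
from collections import defaultdict


def find_duplicate_backups(recovery_points):
    """Return {resource_arn: [vault names]} for resources found in 2+ vaults.

    `recovery_points` is an iterable of dicts that contain at least the
    'ResourceArn' and 'BackupVaultName' keys.
    """
    vaults_by_resource = defaultdict(set)
    for point in recovery_points:
        vaults_by_resource[point["ResourceArn"]].add(point["BackupVaultName"])
    return {
        arn: sorted(vaults)
        for arn, vaults in vaults_by_resource.items()
        if len(vaults) > 1
    }


# Hypothetical sample data: the first file system is backed up twice.
FS_A = "arn:aws:elasticfilesystem:eu-central-1:111122223333:file-system/fs-aaaa"
FS_B = "arn:aws:elasticfilesystem:eu-central-1:111122223333:file-system/fs-bbbb"
sample = [
    {"ResourceArn": FS_A, "BackupVaultName": "aws/efs/automatic-backup-vault"},
    {"ResourceArn": FS_A, "BackupVaultName": "test-vault-efs"},
    {"ResourceArn": FS_B, "BackupVaultName": "test-vault-efs"},
]
duplicates = find_duplicate_backups(sample)
print(duplicates)
```

Several recovery points in the same vault count only once, so a file system is flagged only when its backups are spread over more than one vault.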

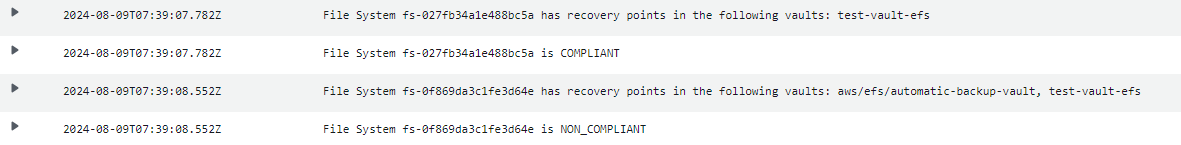

Here’s an example with my two test EFS file systems, where one is compliant and the other is noncompliant.

If EFS file systems with duplicate backups are found (i.e., labeled as noncompliant), you can simply disable the EFS automatic backup for these systems.
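Disabling the automatic backup can also be scripted. Below is a minimal sketch using the EFS `put_backup_policy` call; the helper name and the file system ID in the usage comment are hypothetical, and the client is passed in so the function can be tried against a stub first.

```python
def disable_automatic_backups(efs_client, file_system_id):
    """Turn off EFS automatic backups for one file system.

    Returns the status reported by EFS, which may be a transitional
    value such as 'DISABLING' rather than 'DISABLED'.
    """
    response = efs_client.put_backup_policy(
        FileSystemId=file_system_id,
        BackupPolicy={"Status": "DISABLED"},
    )
    return response["BackupPolicy"]["Status"]


# Usage (needs boto3 and credentials; not run here):
#   import boto3
#   disable_automatic_backups(boto3.client("efs"), "fs-0123456789abcdef0")
```

As far as I know, this only stops new automatic backups. Recovery points that already exist in "aws/efs/automatic-backup-vault" should remain until their retention period ends, so the cost drops gradually rather than immediately.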

Additionally, the associated AWS Lambda function generates a log that shows exactly which EFS file systems have backups in which backup vaults.

Below is the CloudFormation template that creates the Lambda function for the check, the corresponding IAM role, as well as the AWS Config Rule that runs every 12 hours.

This stack can be deployed either directly in your AWS account or across the entire AWS Organization via CloudFormation StackSets or solutions like CfCT or LZA. If the AWS Config Aggregator is set up in your environment, you can also check for duplicate backups of EFS file systems across the entire AWS Organization.

    AWSTemplateFormatVersion: '2010-09-09'
    Description: >
      CloudFormation Template to create a Lambda function, IAM role, and AWS Config Rule to check if duplicate EFS backups exist.

    Resources:
      # IAM Role for Lambda
      DuplicateEFSBackupCheckRole:
        Type: 'AWS::IAM::Role'
        Properties:
          AssumeRolePolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: "Allow"
                Principal:
                  Service:
                    - 'lambda.amazonaws.com'
                Action:
                  - 'sts:AssumeRole'
          Policies:
            - PolicyName: 'DuplicateEFSBackupCheckPolicy'
              PolicyDocument:
                Version: '2012-10-17'
                Statement:
                  - Effect: 'Allow'
                    Action:
                      - 'logs:CreateLogGroup'
                      - 'logs:CreateLogStream'
                      - 'logs:PutLogEvents'
                    Resource: '*'
                  - Effect: 'Allow'
                    Action:
                      - 'elasticfilesystem:DescribeFileSystems'
                    Resource: '*'
                  - Effect: 'Allow'
                    Action:
                      - 'backup:ListBackupVaults'
                      - 'backup:ListRecoveryPointsByBackupVault'
                    Resource: '*'
                  - Effect: 'Allow'
                    Action:
                      - 'config:PutEvaluations'
                    Resource: '*'

      # Lambda Function
      DuplicateEFSBackupCheckLambda:
        Type: 'AWS::Lambda::Function'
        Properties:
          Handler: 'index.lambda_handler'
          Role: !GetAtt 'DuplicateEFSBackupCheckRole.Arn'
          Code:
            ZipFile: |
              import boto3
              from botocore.exceptions import ClientError
              import json

              def lambda_handler(event, context):
                  message = json.loads(event['invokingEvent'])
                  if message['messageType'] == 'ScheduledNotification':
                      handle_scheduled_config(event, message, context)
                  else:
                      print(f'Unexpected event {event}.')

              def handle_scheduled_config(event, message, context):
                  efs_client = boto3.client('efs')
                  backup_client = boto3.client('backup')

                  # Get List of all EFS File Systems
                  file_systems = efs_client.describe_file_systems()

                  # Iterate over the EFS File Systems
                  for fs in file_systems['FileSystems']:
                      compliance_status = 'COMPLIANT'
                      file_system_id = fs['FileSystemId']
                      region = boto3.session.Session().region_name
                      account_id = context.invoked_function_arn.split(":")[4]

                      # Construct the File System ARN
                      file_system_arn = f'arn:aws:elasticfilesystem:{region}:{account_id}:file-system/{file_system_id}'

                      # Get list of all backup vaults
                      backup_vaults = backup_client.list_backup_vaults()
                      recovery_point_count = 0
                      vaults_with_recovery_points = []

                      # Iterate over each backup vault
                      for vault in backup_vaults['BackupVaultList']:
                          vault_name = vault['BackupVaultName']

                          # List recovery points for the file system in each vault
                          try:
                              recovery_points = backup_client.list_recovery_points_by_backup_vault(
                                  BackupVaultName=vault_name,
                                  ByResourceArn=file_system_arn
                              )
                              if 'RecoveryPoints' in recovery_points and recovery_points['RecoveryPoints']:
                                  recovery_point_count += 1
                                  vaults_with_recovery_points.append(vault_name)
                          except ClientError as e:
                              print(f"Error describing recovery points for vault {vault_name} and file system {file_system_id}: {e}")
                              continue

                      # Print the vaults with recovery points for the current file system
                      if vaults_with_recovery_points:
                          print(f"File System {file_system_id} has recovery points in the following vaults: {', '.join(vaults_with_recovery_points)}")

                      # If the file system has recovery points in more than one backup vault, mark as NON_COMPLIANT
                      if recovery_point_count > 1:
                          print(f"File System {file_system_id} is NON_COMPLIANT")
                          compliance_status = 'NON_COMPLIANT'
                      else:
                          print(f"File System {file_system_id} is COMPLIANT")

                      # Send evaluation result to AWS Config
                      send_evaluation_result(file_system_id, compliance_status, message['notificationCreationTime'], event['resultToken'])

              def send_evaluation_result(resource_id, compliance_status, timestamp, result_token):
                  # Prepare evaluation result
                  evaluation_result = {
                      'ComplianceResourceType': 'AWS::EFS::FileSystem',
                      'ComplianceResourceId': resource_id,
                      'ComplianceType': compliance_status,
                      'OrderingTimestamp': timestamp
                  }

                  # Create AWS Config client
                  config_client = boto3.client('config')

                  try:
                      # Put the evaluation result to AWS Config
                      config_client.put_evaluations(
                          Evaluations=[evaluation_result],
                          ResultToken=result_token
                      )
                  except ClientError as e:
                      print(f"Error sending evaluation result to AWS Config: {e}")
          Runtime: 'python3.12'
          Timeout: 300

      # Lambda Permission
      DuplicateEFSBackupCheckLambdaPermission:
        Type: 'AWS::Lambda::Permission'
        Properties:
          FunctionName: !GetAtt 'DuplicateEFSBackupCheckLambda.Arn'
          Action: 'lambda:InvokeFunction'
          Principal: 'config.amazonaws.com'
          SourceAccount: !Sub ${AWS::AccountId}

      # AWS Config Rule
      DuplicateEFSBackupCheckConfigRule:
        Type: 'AWS::Config::ConfigRule'
        # Create the rule only after AWS Config is allowed to invoke the function
        DependsOn: DuplicateEFSBackupCheckLambdaPermission
        Properties:
          ConfigRuleName: 'duplicate-efs-backup-check'
          Description: 'Checks if an EFS file system has backups in more than one backup vault.'
          Scope:
            ComplianceResourceTypes:
              - 'AWS::EFS::FileSystem'
          Source:
            Owner: 'CUSTOM_LAMBDA'
            SourceIdentifier: !GetAtt 'DuplicateEFSBackupCheckLambda.Arn'
            SourceDetails:
              - EventSource: 'aws.config'
                MessageType: 'ScheduledNotification'
                MaximumExecutionFrequency: 'Twelve_Hours'
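Once the rule is deployed in several accounts and a Config aggregator collects their results, the organization-wide view can be queried with a few lines of Python. This is a sketch under assumptions: the helper name is hypothetical, the aggregator name is whatever yours is called, and the client is passed in so the function can be exercised with a stub.

```python
def noncompliant_accounts(config_client, aggregator_name,
                          rule_name="duplicate-efs-backup-check"):
    """Return (account_id, region) pairs where the rule reports NON_COMPLIANT."""
    results = []
    kwargs = {
        "ConfigurationAggregatorName": aggregator_name,
        "Filters": {
            "ConfigRuleName": rule_name,
            "ComplianceType": "NON_COMPLIANT",
        },
    }
    while True:
        page = config_client.describe_aggregate_compliance_by_config_rules(**kwargs)
        for item in page.get("AggregateComplianceByConfigRules", []):
            results.append((item["AccountId"], item["AwsRegion"]))
        token = page.get("NextToken")
        if not token:
            return results
        # Follow pagination until AWS Config stops returning a token
        kwargs["NextToken"] = token


# Usage (needs boto3 and credentials in the aggregator account; not run here):
#   import boto3
#   noncompliant_accounts(boto3.client("config"), "my-org-aggregator")
```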

Why Not Use an SCP?

A question might arise: if AWS Backup Plans are being automated across the AWS Organization to back up all EFS file systems, why not simply use an SCP (Service Control Policy) to disable EFS automatic backups? As of now (August 2024), it is unfortunately not possible to create an SCP that prevents EFS automatic backups from being enabled.

If your AWS Organization uses centrally managed AWS Backup Plans to back up all EFS file systems, you can use my AWS Config Custom Rule in conjunction with an AWS Config Remediation action to disable EFS automatic backups.

Final Words

I hope you enjoyed my article and find my AWS Config Custom Rule useful for monitoring duplicate EFS backups in your environment. The potential cost savings can be significant: I was able to save a client as much as $12,000 per month using this approach. Should you need further assistance with AWS cost optimization, our team at PCG is always ready to help.

Check out our AWS Cost Management and Optimisation!

Feel free to share your feedback and thoughts on the topic!
