Amidst the vast array of AWS cloud services, AWS Lambda stands out as an easy-to-use, "serverless" computing platform that lets you run your applications without having to provision or manage servers.

It's a great offering to get results quickly, but like any good tool, it needs to be used correctly. I've wandered through the sprawling Lambda landscape and stumbled every now and then.

AWS Lambda: easy to use, difficult to master

Being innovative often goes hand in hand with mistakes, and indeed I've experienced quite a few in the AWS Lambda space. Let's take a look at my personal top missteps.

Lambda configurations with maximum power

When testing newly created Lambda functions, you often run into the same problem: the default timeout of 3 seconds is not sufficient.

A quick solution is to increase the value to the maximum of 15 minutes. The same applies to memory: if too much data is loaded, the function aborts because it runs out of memory. Here too, the problem can be solved quickly by increasing the memory to the maximum.

The risk: you do not notice when Lambda functions are programmed inefficiently. Instead, set the limits so that unusual behavior leads to a crash. This is much better and more sustainable from a cost perspective (and also from a security perspective). Use a test environment with the same values as the production environment, run load tests with reference data, and then use the metrics in AWS CloudWatch to determine the correct values for your Lambda configuration.
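
A minimal sketch of how such load-test measurements could be turned into limits, assuming you have already collected the durations and the peak memory usage from CloudWatch. The function name, the headroom factors of 1.5 and 1.25, and the sample numbers are assumptions for illustration, not AWS recommendations.

```python
import math


def suggest_limits(durations_ms, max_memory_used_mb,
                   timeout_headroom=1.5, memory_headroom=1.25):
    """Derive a timeout (seconds) and memory size (MB) from load-test data.

    durations_ms: observed invocation durations in milliseconds.
    max_memory_used_mb: observed peak memory usage per invocation in MB.
    The headroom factors are hypothetical defaults; tune them yourself.
    """
    if not durations_ms or not max_memory_used_mb:
        raise ValueError("need at least one measurement of each kind")

    # Timeout: slowest observed run plus headroom, rounded up to whole seconds.
    timeout_s = math.ceil(max(durations_ms) * timeout_headroom / 1000)
    # Lambda accepts timeouts from 1 to 900 seconds (15 minutes).
    timeout_s = min(max(timeout_s, 1), 900)

    # Memory: highest observed usage plus headroom, rounded up to whole MB.
    memory_mb = math.ceil(max(max_memory_used_mb) * memory_headroom)
    # Lambda accepts memory sizes from 128 to 10240 MB.
    memory_mb = min(max(memory_mb, 128), 10240)

    return {"Timeout": timeout_s, "MemorySize": memory_mb}


# Hypothetical measurements from a load test.
limits = suggest_limits([820, 1140, 2300], [96, 143, 171])
print(limits)  # {'Timeout': 4, 'MemorySize': 214}
```

The keys are named after the `Timeout` and `MemorySize` parameters of the Lambda configuration, so the result can be applied with whatever deployment tooling you already use.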

“Reserved Concurrency” equals “Provisioned Concurrency”

At the beginning of my work, I naively assumed that "Reserved Concurrency" was synonymous with "Provisioned Concurrency". I couldn't have been more wrong. Both mechanisms influence the scaling of your Lambda functions, but with different goals:

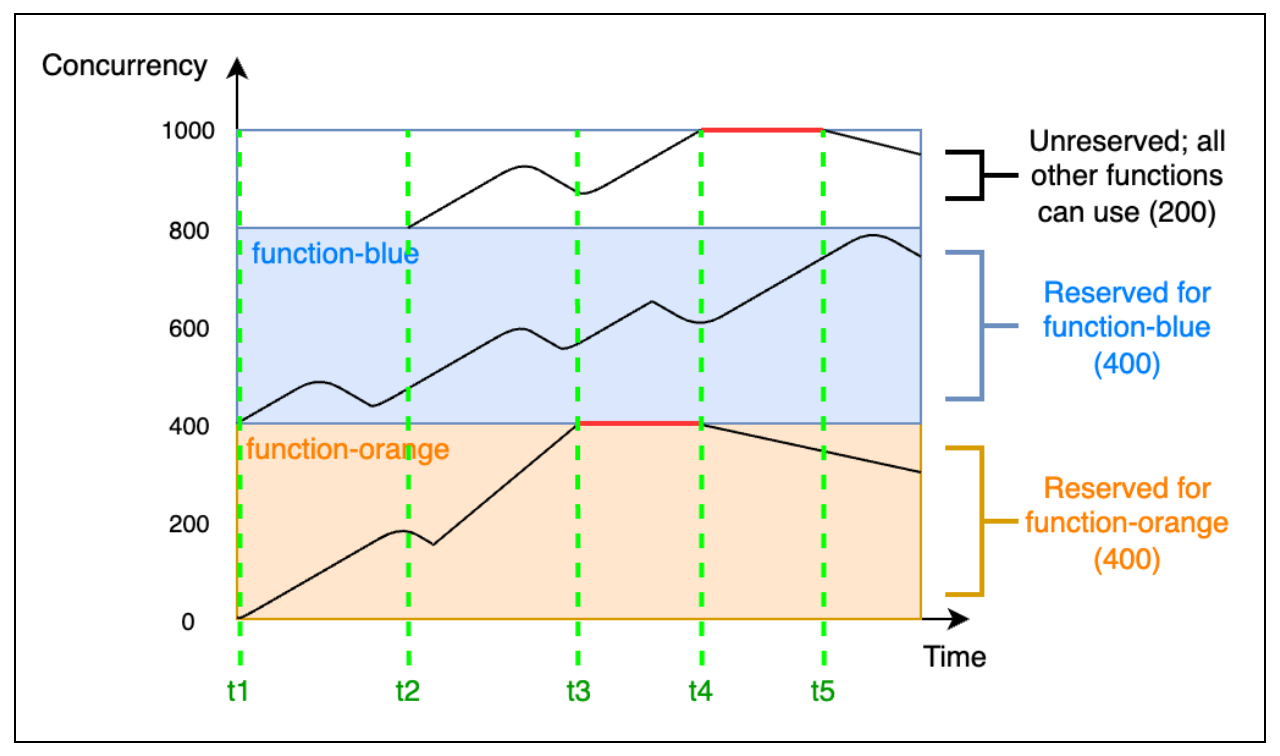

“Reserved Concurrency”

Each AWS account has a limit (a soft limit that can be raised on request) on the number of Lambda invocations that can run at the same time. It applies per region and is shared by all functions in that region. Once this limit has been reached, further invocations are throttled until running ones have finished. With automatic scaling, this limit can be reached faster than you think, especially if you use AWS SQS.

With "Reserved Concurrency", I can set aside part of this quota for an individual Lambda function so that it can always scale up to that number, no matter how high the load is otherwise. However, the function is also limited to this maximum number.

Important to understand: the reserved amount is no longer available to other Lambda functions. It is deducted from the total quota of the account.

The following image illustrates how this works:

Source: https://docs.aws.amazon.com/lambda/latest/dg/lambda-concurrency.html

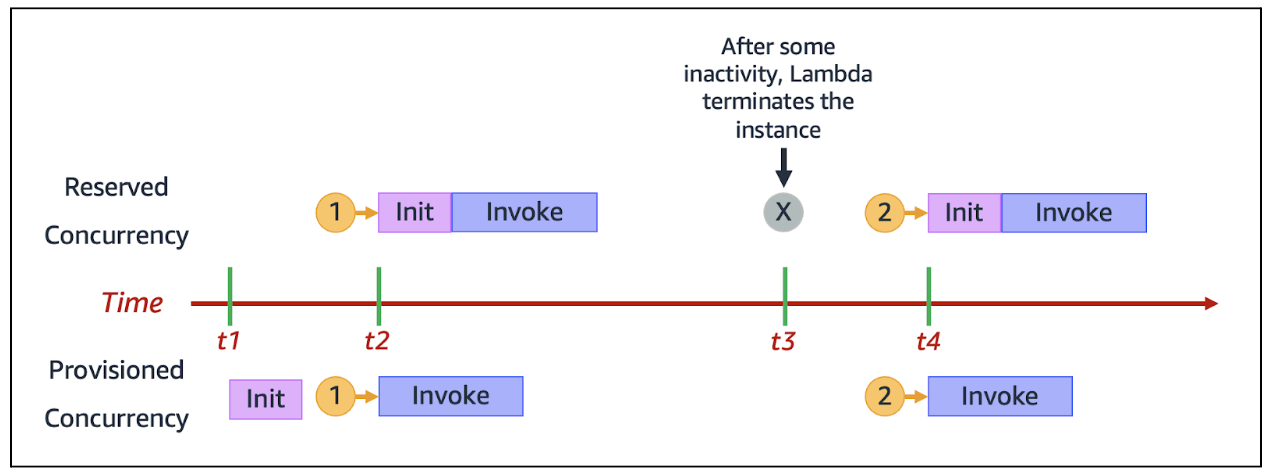
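
The arithmetic behind this can be sketched in a few lines. The function names and numbers below are hypothetical; the account limit of 1,000 and the minimum of 100 unreserved executions are assumptions based on common default quotas and should be checked against your own account.

```python
def unreserved_concurrency(account_limit, reservations, min_unreserved=100):
    """Return the concurrency left for all functions without a reservation.

    account_limit: regional concurrency quota of the account (assumed value).
    reservations: mapping of function name -> reserved concurrency.
    min_unreserved: pool that must stay unreserved (assumed quota rule).
    """
    reserved_total = sum(reservations.values())
    remaining = account_limit - reserved_total
    if remaining < min_unreserved:
        raise ValueError(
            f"only {remaining} unreserved executions left, "
            f"need at least {min_unreserved}"
        )
    return remaining


# Hypothetical reservations for two critical functions.
reservations = {"order-intake": 400, "billing-export": 200}
print(unreserved_concurrency(1000, reservations))  # 400
```

In this example, every function without a reservation has to share the remaining 400 concurrent executions.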

“Provisioned Concurrency”

This value defines how many instances of the function are kept permanently "warm", i.e. initialized. Requests served by these instances skip the initialization phase.

Source: https://docs.aws.amazon.com/lambda/latest/dg/lambda-concurrency.html

This value can be a quick win for performance, but it also increases costs. Let's be honest: using "serverless" to benefit from the "pay-as-you-use" concept, only to then provide your own functions permanently and pay for them? You should think twice about that.

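By the way: for supported runtimes, “Lambda SnapStart” can considerably reduce cold-start times by restoring functions from a snapshot of an already initialized environment, which makes "Provisioned Concurrency" unnecessary in many cases.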

Coping with peak loads through increased "Provisioned Concurrency"

In my projects, we have sometimes had to absorb particularly high load peaks without having the time to optimize the code. Unfortunately, it is very tempting to counter this problem with a large amount of "Provisioned Concurrency", as this can yield an enormous increase in performance without any effort.

Nevertheless, this approach should be carefully considered: the additional costs incurred can be enormous. More importantly, this does not solve the problem in the long term. It is more important to adapt your architecture accordingly in order to be able to cope with peak loads without this "trick".

“Reserved Concurrency” for each Lambda function

A common mistake made by beginners is to assign each Lambda function its own "Reserved Concurrency". It seems to be the logical consequence once you have understood what this value does.

In reality, not every function needs this value. The assignment of "Reserved Concurrency" has an impact on the entire AWS account and at the same time cannot be viewed centrally. There is no overview of which functions use how much of the reservation - so it is easy to make incorrect configurations. So: Please only use "Reserved Concurrency" for absolutely critical Lambda functions that must work in any case. This configuration should then be documented centrally in your system to ensure an overview.
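
One way to build such a central overview yourself is to collect the values through the Lambda API. The following is a minimal sketch, assuming a boto3 Lambda client is passed in; the function name `reserved_concurrency_report` is hypothetical, while `list_functions` and `get_function_concurrency` are existing boto3 calls.

```python
def reserved_concurrency_report(lambda_client):
    """Map function name -> reserved concurrency for every function that has one.

    lambda_client is expected to behave like boto3.client("lambda").
    """
    report = {}
    paginator = lambda_client.get_paginator("list_functions")
    for page in paginator.paginate():
        for function in page["Functions"]:
            name = function["FunctionName"]
            config = lambda_client.get_function_concurrency(FunctionName=name)
            # The key is absent when no reservation is configured.
            reserved = config.get("ReservedConcurrentExecutions")
            if reserved is not None:
                report[name] = reserved
    return report


# Usage (requires boto3 and AWS credentials, therefore left as a comment):
# import boto3
# print(reserved_concurrency_report(boto3.client("lambda")))
```

Running such a report regularly and storing the result next to your documentation keeps the reservations visible in one place.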

All other functions can be managed via AWS Step Functions or services such as SQS. With SQS, messages simply stay in the queue while the account-wide limit is reached and are delivered to the function as soon as capacity is available again; Step Functions can be configured to retry throttled invocations.

Direct and synchronous calls between Lambda functions

When you start using AWS Lambda functions, it seems to make perfect sense to connect Lambda functions directly with each other. In short: a Lambda function calls another Lambda function and waits for it to finish before continuing to work with the result. Although easy to implement, this approach should be avoided at all costs. The following problems arise from direct calls:

- The calling Lambda function must "actively wait", so unnecessary costs are incurred.

- The calling Lambda function still has its own timeout. It is therefore possible that it is terminated while the called function is still working.

- In this case, the called function would still run to completion, but the result can no longer be returned and is lost.

- Scaling is not guaranteed: if no "concurrency" is available for the called function, it cannot start.

In this case too, the solution is to use AWS Step Functions or asynchronous calls with the help of AWS SQS.
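
As a rough sketch of the asynchronous variant, the calling function can hand the task over to a queue and return immediately instead of waiting. The helper name `enqueue_task`, the queue URL, and the payload are hypothetical; `send_message` is the existing boto3 SQS call, and the client is passed in as a parameter.

```python
import json


def enqueue_task(sqs_client, queue_url, task):
    """Hand a task to the next function via SQS instead of invoking it directly.

    sqs_client is expected to behave like boto3.client("sqs").
    Returns the message ID assigned by SQS.
    """
    response = sqs_client.send_message(
        QueueUrl=queue_url,
        MessageBody=json.dumps(task),
    )
    return response["MessageId"]


# Usage inside a Lambda handler (requires boto3, therefore left as a comment):
# import boto3
# enqueue_task(boto3.client("sqs"), "<your queue URL>", {"orderId": 42})
```

The calling function finishes right away, and the downstream function is triggered by the queue whenever capacity is available.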

AWS SQS: “Batch Size” set to 1

We have now seen some cases that would work much better with AWS SQS. However, there is another common mistake right here: the "Batch Size" setting. This setting controls how many messages from the SQS queue are passed to a single Lambda invocation at once. This has several implications:

- Messages that are sent to an AWS SQS queue should always represent a single task. Avoid sending a JSON array consisting of several tasks as a message, for example. Because...

- … this is exactly what the "Batch Size" setting of the SQS trigger is for: it bundles several messages and passes them to the corresponding Lambda function in one invocation.

- Not to be underestimated: the code of the Lambda function must be designed for this. Depending on the use case, it may take some effort to enable the Lambda function to process several requests in succession (caching, already initialized variables, etc.).

- Please consider the timeout you need to deal with a corresponding batch size. Does your function need 1 minute for a task? Then it will probably take longer for 5 calls.

- But be careful: As already discussed, do not make the mistake of simply setting the timeout to 15 minutes.

- If a Lambda function is terminated in the middle of a batch job (timeout, code error, etc.), all messages of the batch become visible in the queue again and are delivered once more, including those that were already processed successfully.

As you can see, it is very important that Lambda functions can always track whether tasks have already been processed or not, in order to avoid multiple processing.

AWS Lambda code for orchestration

I keep seeing Lambda functions that do nothing but wait for a certain status or value in order to react to it. Lambda is not designed for this: the function incurs costs for the entire time it waits and is limited to a timeout of 15 minutes. Please avoid using Lambda functions for this purpose. Event-based Lambda triggers or AWS Step Functions can be a useful alternative.
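
A minimal sketch of how the waiting could be moved into AWS Step Functions: a short Lambda function checks the status once, and the state machine handles the pause and the retry. The state names, the `status` field with the value `READY`, and the ARN in the usage comment are assumptions for illustration; the definition is built as a Python dictionary in Amazon States Language.

```python
import json


def build_polling_state_machine(check_function_arn, wait_seconds=30):
    """Return a state machine definition that polls a status check function.

    check_function_arn: ARN of a short-running Lambda function that returns
    a result such as {"status": "READY"} (hypothetical contract).
    """
    return {
        "Comment": "Poll a status without keeping a Lambda function running",
        "StartAt": "CheckStatus",
        "States": {
            "CheckStatus": {
                "Type": "Task",
                "Resource": check_function_arn,
                "ResultPath": "$.check",
                "Next": "IsReady",
            },
            "IsReady": {
                "Type": "Choice",
                "Choices": [
                    {
                        "Variable": "$.check.status",
                        "StringEquals": "READY",
                        "Next": "Done",
                    }
                ],
                "Default": "WaitBeforeRetry",
            },
            "WaitBeforeRetry": {
                "Type": "Wait",
                "Seconds": wait_seconds,
                "Next": "CheckStatus",
            },
            "Done": {"Type": "Succeed"},
        },
    }


# Hypothetical ARN, printed as JSON for use in a deployment template.
definition = build_polling_state_machine(
    "arn:aws:lambda:eu-central-1:123456789012:function:check-status"
)
print(json.dumps(definition, indent=2))
```

The Lambda function only runs for the brief moment of each check, while the waiting itself takes place in the `Wait` state.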

AWS Lambda “Reverse Calling”

Is the timeout of 15 minutes simply too short? How about calculating in the code how long the function has been running, so that it can call itself again with the same payload just before the timeout hits? Unfortunately, this approach is used again and again, and it should be avoided at all costs. If you have reached this point, AWS Lambda is often the wrong tool. Here are a few possible solutions:

- Try to divide the task into smaller parts and build several Lambda functions.

- Make use of AWS Step Functions.

- If the duration cannot be reduced, use AWS ECS instead.
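
To illustrate the first option, a large job can be cut into chunks that each fit comfortably within the timeout, with every chunk sent as its own task (for example as an SQS message or as one iteration in Step Functions). The helper name and the chunk size are assumptions for illustration.

```python
def split_into_chunks(items, chunk_size):
    """Split a list of work items into chunks of at most chunk_size items."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


# Hypothetical job: seven record IDs, processed three at a time.
print(split_into_chunks([1, 2, 3, 4, 5, 6, 7], 3))  # [[1, 2, 3], [4, 5, 6], [7]]
```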

Conclusion

In this blog article, we have looked at some cases from my personal experience that prevent optimal use of AWS Lambda. In summary:

- Always use AWS Lambda in the sense of a micro-service architecture.

- Use AWS SQS or other methods to connect the functions asynchronously with each other.

- Be extremely sparing and careful when using "Reserved Concurrency" and "Provisioned Concurrency".

- Make sure that your Lambda functions can always keep track of which tasks have already been processed to avoid multiple processing.

- Be aware of the "timeout" setting for each individual function and do not try to circumvent this mechanism.
